Interprocess Communication

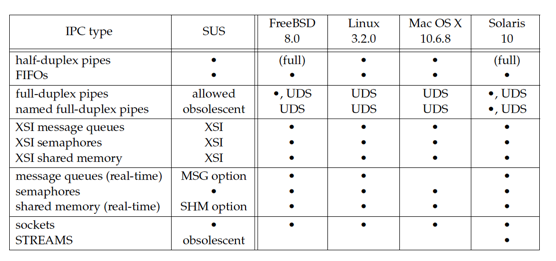

Summary of UNIX System IPC

For full-duplex pipes, if the feature can be provided through UNIX domain sockets, we show "UDS" in the column.

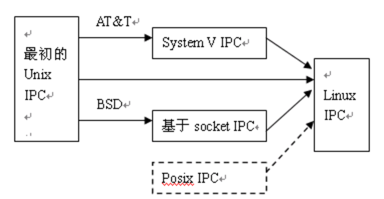

XSI IPC vs. POSIX IPC

XSI IPC is the older of the two interfaces.

XSI IPC is widely implemented across UNIX systems.

POSIX IPC interfaces are easier to use.

POSIX semaphores became part of the base specification in SUSv4; POSIX message queues and shared memory remain optional.

XSI IPC

The three types of IPC that we call XSI IPC (message queues, semaphores, and shared memory) have many similarities. In this section, we cover these similar features; in the following sections, we look at the specific functions for each of the three IPC types.

Identifiers and Keys

- Internal name: each IPC structure (message queue, semaphore, or shared memory segment) in the kernel is referred to by a non-negative integer identifier.

- External name: each IPC object is associated with a key that acts as an external name. Whenever an IPC structure is being created (by calling msgget, semget, or shmget), a key must be specified.
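The relationship between the external key and the internal identifier can be sketched as follows. This is a minimal illustration, not the only way to obtain a key: the path `/tmp`, the project ID `'q'`, and the function name `key_maps_to_one_id` are assumptions made for the example.

```c
#include <stdio.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/msg.h>

/* Hypothetical values: any existing path and project ID that both
 * cooperating processes agree on would work. */
#define KEY_PATH "/tmp"
#define KEY_PROJ 'q'

/*
 * Look up the same message queue twice through the same key.
 * Returns 1 if both calls yield the same non-negative identifier,
 * 0 if they differ, and -1 on error.
 */
int
key_maps_to_one_id(void) {
    key_t key;
    int id1, id2, result;

    key = ftok(KEY_PATH, KEY_PROJ);          /* external name */
    if (key == (key_t)-1) {
        return -1;
    }

    id1 = msgget(key, IPC_CREAT | 0600);     /* create if absent */
    id2 = msgget(key, 0);                    /* look up existing queue only */

    if (id1 < 0 || id2 < 0) {
        result = -1;
    } else {
        result = (id1 == id2);               /* internal name is the same */
    }

    if (id1 >= 0) {
        msgctl(id1, IPC_RMID, NULL);         /* do not leave the queue behind */
    }
    return result;
}
```

Two unrelated processes that call `ftok` with the same path and project ID would normally arrive at the same key, and therefore at the same identifier, which is how they rendezvous on one IPC structure.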

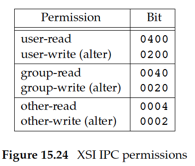

Permission

Disadvantages

- A fundamental problem with XSI IPC is that the IPC structures are systemwide and do not have a reference count. For example, if we create a message queue, place some messages on the queue, and then terminate, the message queue and its contents are not deleted. They remain in the system until specifically read or deleted by some process calling msgrcv or msgctl, by someone executing the ipcrm(1) command, or by the system being rebooted. Compare this with a pipe, which is completely removed when the last process to reference it terminates. With a FIFO, although the name stays in the file system until explicitly removed, any data left in a FIFO is removed when the last process to reference the FIFO terminates.

- Another problem with XSI IPC is that these IPC structures are not known by names in the file system. We can’t access them and modify their properties with the functions we described in Chapters 3 and 4. Almost a dozen new system calls (msgget, semop, shmat, and so on) were added to the kernel to support these IPC objects. We can’t see the IPC objects with an ls command, we can’t remove them with the rm command, and we can’t change their permissions with the chmod command. Instead, two new commands, ipcs(1) and ipcrm(1), were added.
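The missing reference count can be observed directly. In the sketch below, a child process creates a queue, sends one message, and terminates; the parent then still finds the message and has to remove the queue explicitly. The `demo_msg` layout and the function name `queue_outlives_creator` are hypothetical, chosen only for this illustration.

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/msg.h>
#include <sys/wait.h>
#include <unistd.h>

struct demo_msg {            /* hypothetical message layout */
    long mtype;              /* message type, must be > 0 */
    char mtext[32];          /* message data */
};

/*
 * Returns 0 and copies the message text into out if the message
 * survived the termination of the process that created the queue;
 * returns -1 on any error.
 */
int
queue_outlives_creator(char *out, size_t outlen) {
    int fds[2], id = -1, rc = 0;
    pid_t pid;
    ssize_t n;
    struct demo_msg m;

    if (pipe(fds) < 0) {
        return -1;
    }
    if ((pid = fork()) < 0) {
        return -1;
    }
    if (pid == 0) {                          /* child: creator of the queue */
        struct demo_msg cm = { .mtype = 1 };

        close(fds[0]);
        id = msgget(IPC_PRIVATE, 0600);
        if (id < 0) {
            _exit(1);
        }
        strcpy(cm.mtext, "still here");
        if (msgsnd(id, &cm, sizeof(cm.mtext), 0) < 0) {
            _exit(1);
        }
        /* report the identifier to the parent, then terminate */
        if (write(fds[1], &id, sizeof(id)) != (ssize_t)sizeof(id)) {
            _exit(1);
        }
        _exit(0);
    }

    close(fds[1]);
    n = read(fds[0], &id, sizeof(id));
    close(fds[0]);
    if (waitpid(pid, NULL, 0) < 0 || n != (ssize_t)sizeof(id)) {
        return -1;
    }

    /* The creator is gone, yet the queue and its contents remain. */
    if (msgrcv(id, &m, sizeof(m.mtext), 0, IPC_NOWAIT) < 0) {
        rc = -1;
    } else {
        snprintf(out, outlen, "%s", m.mtext);
    }
    /* Nothing removes the queue for us: delete it explicitly. */
    if (msgctl(id, IPC_RMID, NULL) < 0) {
        rc = -1;
    }
    return rc;
}
```

If the final `msgctl` call were omitted, the queue would stay visible in the output of ipcs(1) until removed with ipcrm(1) or a reboot.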

Advantages and Disadvantages

PIPE

Advantage:

- Simple to use, and widely used to combine shell commands into pipelines.

Disadvantage:

- Can be used only between processes that have a common ancestor (typically a parent and its child).

- Historically, they have been half duplex (i.e., data flows in only one direction).
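The common-ancestor requirement follows from how a pipe is shared: the descriptors exist only in the process that called `pipe` and in processes forked from it afterwards. A minimal sketch, in which the function name `read_from_child` and the message text are assumptions for the example:

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * The parent creates a pipe, forks, and reads what the child writes.
 * Returns the number of bytes stored in buf (NUL-terminated),
 * or -1 on error.
 */
ssize_t
read_from_child(char *buf, size_t len) {
    int fds[2];              /* fds[0] is the read end, fds[1] the write end */
    pid_t pid;
    size_t total = 0;
    ssize_t n;

    if (len == 0 || pipe(fds) < 0) {
        return -1;
    }
    if ((pid = fork()) < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {                          /* child: writer */
        const char *msg = "hello from child";
        ssize_t w;

        close(fds[0]);                       /* data flows one way only */
        w = write(fds[1], msg, strlen(msg));
        _exit(w == (ssize_t)strlen(msg) ? 0 : 1);
    }

    /* parent: reader; closing the unused write end lets read() see EOF */
    close(fds[1]);
    while (total < len - 1 &&
           (n = read(fds[0], buf + total, len - 1 - total)) > 0) {
        total += (size_t)n;
    }
    buf[total] = '\0';
    close(fds[0]);
    waitpid(pid, NULL, 0);
    return (ssize_t)total;
}
```

Each side closes the end it does not use, which reflects the half-duplex nature of the pipe: two-way traffic would need a second pipe.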

FIFO

Advantage:

- A FIFO is a named pipe, so it can be used between unrelated processes.

- The normal file I/O functions (e.g., close, read, write, unlink) all work with FIFOs.

Disadvantage:

- Requires a name in the file system.

- Although the name stays in the file system until explicitly removed, any data left in a FIFO is removed when the last process to reference the FIFO terminates.
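The points above can be sketched with the ordinary file I/O calls. The function name `fifo_roundtrip` and the message text are hypothetical, and a forked child stands in for what would normally be an independent process that opens the same path.

```c
#include <fcntl.h>
#include <string.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Create a FIFO at path, let a child write one message into it,
 * read the message back in the parent, then remove the name.
 * Returns 0 on success, -1 on error.
 */
int
fifo_roundtrip(const char *path, char *buf, size_t len) {
    pid_t pid;
    int rfd, rc = -1;

    if (len == 0 || mkfifo(path, 0600) < 0) {
        return -1;
    }
    if ((pid = fork()) < 0) {
        unlink(path);
        return -1;
    }
    if (pid == 0) {                          /* writer */
        const char *msg = "via fifo";
        ssize_t w;
        int wfd = open(path, O_WRONLY);      /* blocks until a reader opens */

        if (wfd < 0) {
            _exit(1);
        }
        w = write(wfd, msg, strlen(msg));
        close(wfd);
        _exit(w < 0 ? 1 : 0);
    }

    rfd = open(path, O_RDONLY);              /* blocks until the writer opens */
    if (rfd >= 0) {
        ssize_t n = read(rfd, buf, len - 1);

        if (n >= 0) {
            buf[n] = '\0';
            rc = 0;
        }
        close(rfd);
    }
    waitpid(pid, NULL, 0);
    unlink(path);                            /* the name persists until removed */
    return rc;
}
```

A call such as `fifo_roundtrip("/tmp/demo_fifo", buf, sizeof buf)` (the path is only an example) leaves no file behind because of the final `unlink`; without it the name would remain in the file system even though the data is gone.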

Message Queues

Advantage:

- High performance

Shared Memory

Advantage:

- Fastest form of IPC, because the data does not have to be copied between processes.

Disadvantage:

- Requires a synchronization mechanism (for example, a semaphore) to coordinate access.

UNIX Domain Sockets

Disadvantage:

- Can only be used to communicate with processes running on the same machine.
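Within a single machine, a UNIX domain socket pair behaves like a full-duplex pipe: each process keeps one descriptor and uses it for both reading and writing. A minimal sketch, assuming a hypothetical request/reply exchange in which the child answers with `ack:` followed by the request (the function name `full_duplex_echo` is also an assumption):

```c
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Send req to a child over one end of a socket pair and read the
 * child's reply from the same descriptor.
 * req is assumed to be shorter than 64 bytes.
 * Returns 0 on success, -1 on error.
 */
int
full_duplex_echo(const char *req, char *reply, size_t len) {
    int sv[2];
    pid_t pid;
    int rc = -1;

    if (len == 0 || socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0) {
        return -1;
    }
    if ((pid = fork()) < 0) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }
    if (pid == 0) {                          /* child uses sv[1] both ways */
        char in[64], out[80];
        ssize_t n;
        int m;

        close(sv[0]);
        n = read(sv[1], in, sizeof(in) - 1);
        if (n < 0) {
            _exit(1);
        }
        in[n] = '\0';
        m = snprintf(out, sizeof(out), "ack:%s", in);
        if (write(sv[1], out, (size_t)m) != m) {
            _exit(1);
        }
        _exit(0);
    }

    close(sv[1]);                            /* parent uses sv[0] both ways */
    if (write(sv[0], req, strlen(req)) == (ssize_t)strlen(req)) {
        ssize_t n = read(sv[0], reply, len - 1);

        if (n >= 0) {
            reply[n] = '\0';
            rc = 0;
        }
    }
    close(sv[0]);
    waitpid(pid, NULL, 0);
    return rc;
}
```

Compared with an ordinary pipe, no second channel is needed for the reply, which is the sense in which UNIX domain sockets can provide full-duplex pipes.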

Example

A complete demo that combines XSI shared memory with a POSIX semaphore.

    #include "apue.h"
    #include <fcntl.h>      /* O_CREAT */
    #include <sys/ipc.h>
    #include <sys/shm.h>
    #include <sys/stat.h>   /* S_IRWXU */
    #include <sys/wait.h>   /* waitpid */
    #include <semaphore.h>

    /* ftok() needs an existing file: create it first, e.g. with `touch /tmp/shm_x`. */
    #define SHM_PATH "/tmp/shm_x"
    /* Portable POSIX semaphore names start with a slash and contain no other slashes. */
    #define SEM_PATH "/sem_x"
    #define ID 123
    #define SHM_SIZE 1024
    #define SHM_MODE 0600
    #define NUM 50          /* number of child processes */

    /* Get (creating it if needed) the shared memory segment and return its identifier. */
    int
    shm_init(void) {
        int shmid;
        key_t key;

        key = ftok(SHM_PATH, ID);
        if (key == -1) {
            err_sys("ftok failed");
        }
        if ((shmid = shmget(key, SHM_SIZE, IPC_CREAT|SHM_MODE)) == -1) {
            err_sys("shmget failed");
        }
        return shmid;
    }

    /* Remove the shared memory segment from the system. */
    void
    shm_rm(int shmid) {
        if (shmctl(shmid, IPC_RMID, 0) < 0) {
            err_sys("shmctl rm failed");
        }
    }

    /* Open (creating it if needed) a named semaphore with initial value 1. */
    sem_t *
    semlock_alloc(void) {
        sem_t *sem;

        sem = sem_open(SEM_PATH, O_CREAT, S_IRWXU, 1);
        if (sem == SEM_FAILED) {
            return NULL;
        }
        return sem;
    }

    int
    semlock(sem_t *sem) {
        return sem_wait(sem);
    }

    int
    semunlock(sem_t *sem) {
        return sem_post(sem);
    }

    /* Remove the semaphore's name, then release this process's reference to it. */
    void
    semlock_free(sem_t *sem) {
        sem_unlink(SEM_PATH);
        sem_close(sem);
    }

    /* Each child attaches the segment and increments the counter under the lock. */
    void
    child_work(sem_t *sem) {
        int shmid;
        int *count;
        int temp;

        shmid = shm_init();
        count = shmat(shmid, 0, 0);
        if (count == (void *) -1) {
            err_sys("shmat failed");
        }

        if (semlock(sem) < 0) {
            err_sys("sem_wait failed");
        }
        temp = *count;
        temp++;
        usleep(1);          /* widen the window in which a race could occur */
        *count = temp;
        if (semunlock(sem) < 0) {
            err_sys("sem_post failed");
        }

        shmdt(count);
    }

    int
    main(void) {
        int i;
        pid_t pid;
        int shmid;
        int *count;
        sem_t *sem;
        pid_t pids[NUM];

        shmid = shm_init();
        count = (int *)shmat(shmid, 0, 0);
        if (count == (void *) -1) {
            err_sys("shmat failed");
        }
        *count = 0;

        sem = semlock_alloc();
        if (sem == NULL) {
            err_sys("alloc semlock failed");
        }

        for (i = 0; i < NUM; i++) {
            pid = fork();
            if (pid < 0) {
                err_sys("fork failed");
            } else if (pid == 0) {
                /* child */
                child_work(sem);
                exit(0);
            } else {
                /* parent */
                pids[i] = pid;
            }
        }

        for (i = 0; i < NUM; i++) {
            waitpid(pids[i], NULL, 0);
        }

        /* Without the semaphore-based lock, the increments would race
           and the final count would be unpredictable. */
        printf("final count: %d\n", *count);

        shmdt(count);
        shm_rm(shmid);
        semlock_free(sem);
        exit(0);
    }